This article is Part 3 of Ampere Computing’s Accelerating the Cloud series. You can read Part 1 here, and Part 2 here.

As we showed in Part 2 of this series, redeploying applications to a cloud native compute platform can be a relatively straightforward process. For example, Momento described their redeployment experience as “meaningfully less work than we anticipated. Pelikan worked immediately on the T2A (Google’s Ampere-based cloud native platform) and we used our existing tuning processes to optimize it.”

Of course, applications can be complex, with many components and dependencies. The greater the complexity, the more issues can arise. From this perspective, Momento’s redeployment of Pelikan Cache to Ampere cloud native processors offers many insights. The company had a complex architecture in place, and they wanted to automate everything they could. The redeployment process gave them an opportunity to achieve this.

Applications Suitable for Cloud Native Processing

The first consideration is to determine how your application can benefit from redeployment on a cloud native compute platform. Most cloud applications are well-suited for cloud native processing. To understand which applications can benefit most from a cloud native approach, let’s take a closer look at the Ampere cloud native processor architecture.

To achieve higher processing efficiency and lower power dissipation, Ampere took a different approach to designing our cores: we focused on the actual compute needs of cloud native applications in terms of performance, power, and functionality, and avoided integrating legacy processor functionality that had been added for non-cloud use cases. For example, scalable vector extensions (SVE) are useful when an application has to process a lot of 3D graphics or certain types of HPC workloads, but they come with a trade-off in power and core density. For applications that require SVE, like Android gaming in the cloud, a Cloud Service Provider might choose to pair Ampere processors with GPUs to accelerate 3D performance.

For cloud native workloads, the reduced power consumption and increased core density of Ampere cores mean that applications run with higher performance while consuming less power and dissipating less heat. In short, a cloud native compute platform will likely provide superior performance, greater power efficiency, and higher compute density at a lower operating cost for most applications.

Ampere excels with microservice-based applications that have numerous independent components. Such applications can benefit significantly from the availability of more cores, and Ampere offers high core density: 128 cores on a single IC and up to 256 cores in a two-socket 1U chassis.

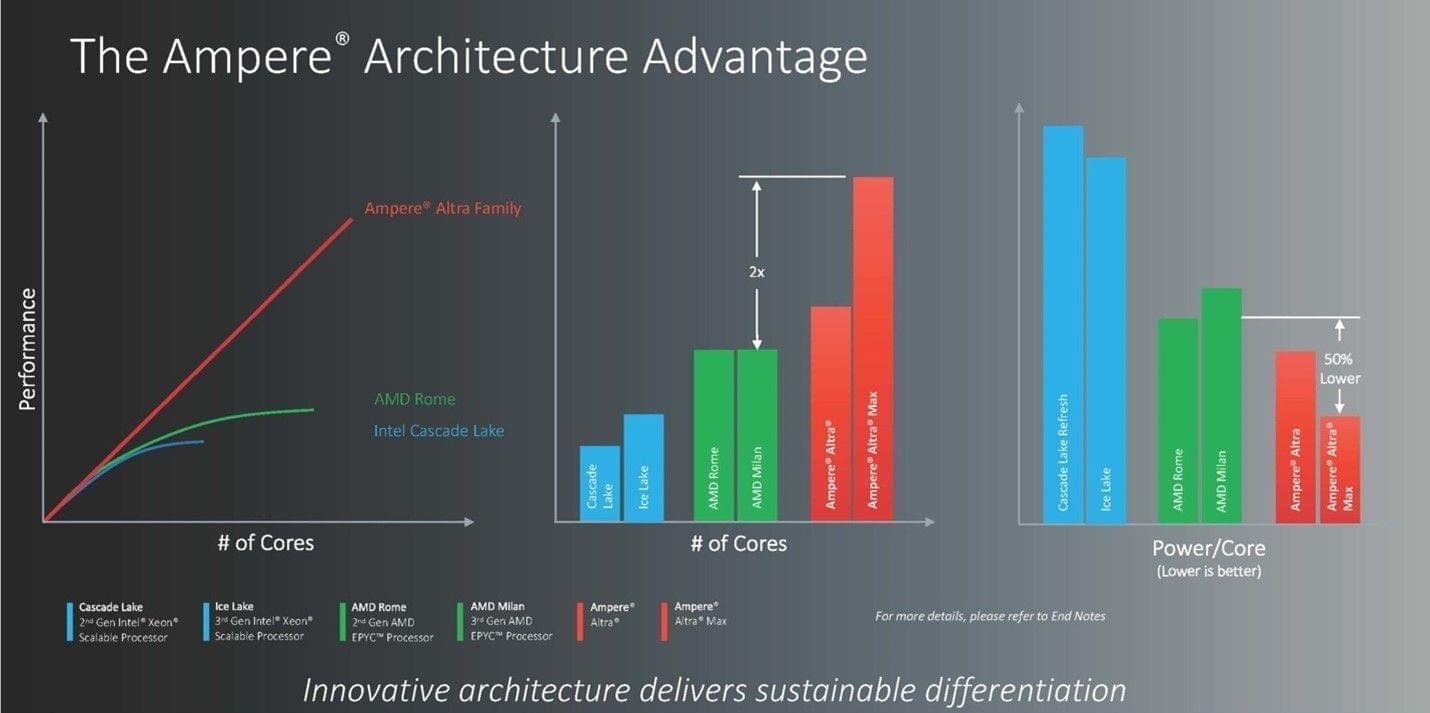

In fact, the benefits of Ampere are most visible when you scale horizontally (i.e., load balance across many instances). Because Ampere scales linearly with load, each core you add provides a direct benefit. Compare this to x86 architectures, where the benefit of each new core quickly diminishes (see Figure 1).

Figure 1: Because Ampere scales linearly with load, each core added provides a direct benefit. Compare this to x86 architectures, where the benefit of each added core quickly diminishes.

Proprietary Dependencies

Part of the challenge in redeploying applications is identifying proprietary dependencies. Any place in the software supply chain where binary files or dedicated x86-based packages are used will require attention. Many of these dependencies can be located by searching for files with “x86” in the filename. The substitution process is generally easy: replace the x86 package with the appropriate Arm ISA-based version, or, if you have access to the source code, recompile the package for the Ampere cloud native platform.
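As a rough illustration, that search can be scripted. The Python sketch below is our own assumption, not a tool used in the redeployments described here, and its function names are hypothetical. It walks a directory tree and flags two kinds of candidates: files whose names mention x86 (it also checks “amd64”, the Debian architecture name for x86-64 packages), and Linux ELF binaries whose header declares an x86 machine type. The `e_machine` values come from the ELF specification; Windows PE and macOS Mach-O files are not inspected.

```python
import os
import struct
from pathlib import Path

# ELF e_machine values (subset, from the ELF specification).
EM_386 = 3
EM_X86_64 = 62
EM_AARCH64 = 183
X86_MACHINES = {EM_386, EM_X86_64}

# Filename fragments treated as x86 hints (assumption: "amd64" added
# because Debian-style packages name x86-64 builds that way).
X86_NAME_HINTS = ("x86", "amd64")


def elf_machine(header: bytes):
    """Return the ELF e_machine field, or None if the bytes are not ELF."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        return None
    # EI_DATA (byte 5): 1 = little-endian, 2 = big-endian.
    endian = "<" if header[5] == 1 else ">"
    # e_machine is a 16-bit field at offset 18 in both ELF32 and ELF64.
    (machine,) = struct.unpack_from(endian + "H", header, 18)
    return machine


def find_x86_dependencies(root):
    """Yield (path, reason) for files under root that look x86-specific."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if any(hint in name.lower() for hint in X86_NAME_HINTS):
                yield path, "filename mentions x86"
                continue
            try:
                with open(path, "rb") as f:
                    header = f.read(20)
            except OSError:
                continue  # unreadable file, e.g. permissions or broken symlink
            if elf_machine(header) in X86_MACHINES:
                yield path, "x86 ELF binary"
```

For example, `for path, reason in find_x86_dependencies("vendor/"): print(path, reason)` (with `vendor/` standing in for wherever your third-party code lives) prints one line per candidate. Treat the output as a starting list for review: a filename match can be a false positive, and binaries already built for Arm (`e_machine` 183) are deliberately not flagged.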

Some dependencies pose performance issues but not functional ones. Consider a machine learning framework that uses code optimized for an x86 platform. The framework will still run on a cloud native platform, just not as efficiently as it would on an x86-based platform. The fix is simple: identify an equivalent version of the framework optimized for the Arm ISA, such as those included in Ampere AI. Finally, there are ecosystem dependencies. Some commercial software your application depends on, such as the Oracle database, may not be available in an Arm ISA-based version. If so, the application may not yet be suitable for redeployment until such versions are available. Workarounds for dependencies like this, such as replacing them with a cloud native-friendly alternative, might be possible, but could require significant changes to your application.

Some dependencies are outside of application code, such as scripts (e.g., playbooks in Ansible, recipes in Chef, and so on). If your scripts assume a particular package name or architecture, you may need to change them when deploying to a cloud native compute platform. Most changes like this are straightforward, and a careful review of your scripts will reveal most such issues. Take particular care with naming assumptions the development team may have made over time.
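One way to avoid hard-coded architecture assumptions in helper scripts is to derive the architecture at run time. The sketch below is a hypothetical Python helper, not part of Ansible or Chef. It maps the machine name reported by `platform.machine()` (on Linux this matches `uname -m`, e.g. `x86_64` or `aarch64`) to the Debian-style package architecture names `amd64` and `arm64`, then builds a filename following the Debian `<name>_<version>_<arch>.deb` convention. The package name `mytool` used below is illustrative only.

```python
import platform

# Machine names as reported by platform.machine(), lower-cased, mapped to
# Debian-style package architecture names.
MACHINE_TO_DEB_ARCH = {
    "x86_64": "amd64",   # Linux on x86-64
    "amd64": "amd64",    # Windows ("AMD64") and FreeBSD spelling
    "aarch64": "arm64",  # Linux on 64-bit Arm, e.g. Ampere processors
    "arm64": "arm64",    # macOS spelling
}


def deb_arch(machine=None):
    """Return the Debian architecture name for this (or the given) machine."""
    m = (machine or platform.machine()).lower()
    try:
        return MACHINE_TO_DEB_ARCH[m]
    except KeyError:
        # Fail loudly instead of silently assuming x86.
        raise ValueError(f"unsupported machine type: {m!r}") from None


def package_filename(name, version, machine=None):
    """Build a <name>_<version>_<arch>.deb filename for the target machine."""
    return f"{name}_{version}_{deb_arch(machine)}.deb"
```

On an Ampere-based Linux host, `package_filename("mytool", "1.2.3")` would yield `mytool_1.2.3_arm64.deb`. Raising an error for unknown machine types is a deliberate choice: a script that quietly falls back to the x86 package is exactly the kind of naming assumption worth catching during redeployment.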

The reality is that these issues are generally easy to deal with. You just need to be thorough in identifying and addressing them. However, before evaluating the cost of addressing such dependencies, it makes sense to consider the concept of technical debt.

Technical Debt

In the Forbes article Technical Debt: A Hard-to-Measure Obstacle to Digital Transformation, technical debt is defined as “the accumulation of relatively quick fixes to systems, or heavy-but-misguided investments, which can be money sinks in the long run.” Quick fixes keep systems going, but eventually the accumulated technical debt becomes too high to ignore. Over time, technical debt increases the cost of change in a software system, in the same way that limescale build-up in a coffee machine will eventually degrade its performance.

For example, when Momento redeployed Pelikan Cache to the Ampere cloud native processor, they had logging and monitoring code in place that relied on open-source code that was 15 years old. The code worked, so it was never updated. However, as the tools changed over time, the code needed to be recompiled. A certain amount of work was required to maintain backwards compatibility, creating dependencies on the old code. Over time, all these dependencies add up. At some point, when maintaining them becomes too complex and too costly, you’ll have to transition to new code. The technical debt gets called in, so to speak.

When redeploying applications to a cloud native compute platform, it’s important to understand your current technical debt and how it drives your decisions. Years of maintaining and accommodating legacy code accumulate technical debt that makes redeployment more complex. However, this isn’t a cost of redeployment, per se. Even if you decide not to redeploy to another platform, someday you’re going to have to make up for all those quick fixes and other decisions to delay updating code. You just haven’t had to yet.

How real is technical debt? According to a McKinsey study (cited in the Forbes article), 30% of the CIOs surveyed estimated that more than 20% of their technical budget for new products was actually diverted to resolving issues related to technical debt.

Redeployment is a great opportunity to address some of the technical debt applications have acquired over time. Imagine recovering a portion of the “20%” your company diverts to resolving technical debt. While this may add time to the redeployment process, taking care of technical debt has the longer-term benefit of reducing the complexity of managing and maintaining code. For example, rather than carrying over dependencies, you can “reset” many of them by transitioning code to your current development environment. It’s an investment that can pay quick dividends by simplifying your development cycle.

Anton Akhtyamov, Product Manager at Plesk, describes his experience with redeployment: “We had some limitations right after the porting. Plesk is a big platform where a lot of additional modules/extensions can be installed. Some weren’t supported on Arm, such as Dr.Web and Kaspersky Antivirus. Certain extensions weren’t available either. However, the majority of our extensions were already supported using packages rebuilt for Arm by vendors. We also have our own backend code (mainly C++), but as we had previously adapted it from x86 to support x86-64, we just rebuilt our packages without any critical issues.”

For two more examples of real-world redeployment to a cloud native platform, see Porting Takua to Arm and OpenMandriva on Ampere Altra.

In Part 4 of this series, we’ll dive into what kind of results you can expect when redeploying applications to a cloud native compute platform.