
Bayesian optimization (BayesOpt) is a powerful tool widely used for global optimization tasks, such as hyperparameter tuning, protein engineering, synthetic chemistry, robot learning, and even baking cookies. BayesOpt is a great strategy for these problems because they all involve optimizing black-box functions that are expensive to evaluate. A black-box function’s underlying mapping from inputs (configurations of the thing we want to optimize) to outputs (a measure of performance) is unknown. However, we can attempt to understand its internal workings by evaluating the function for different combinations of inputs. Because each evaluation can be computationally expensive, we need to find the best inputs in as few evaluations as possible. BayesOpt works by repeatedly constructing a surrogate model of the black-box function and strategically evaluating the function at the most promising or informative input location, given the information observed so far.

Gaussian processes are popular surrogate models for BayesOpt because they are easy to use, can be updated with new data, and provide a confidence level about each of their predictions. The Gaussian process model constructs a probability distribution over possible functions. This distribution is specified by a mean function (what these possible functions look like on average) and a kernel function (how much these functions can vary across inputs). The performance of BayesOpt depends on whether the confidence intervals predicted by the surrogate model contain the black-box function. Traditionally, experts use domain knowledge to quantitatively define the mean and kernel parameters (e.g., the range or smoothness of the black-box function) to express their expectations about what the black-box function should look like. However, for many real-world applications like hyperparameter tuning, it is very difficult to understand the landscapes of the tuning objectives. Even for experts with relevant experience, it can be challenging to narrow down appropriate model parameters.

In “Pre-trained Gaussian processes for Bayesian optimization”, we consider the challenge of hyperparameter optimization for deep neural networks using BayesOpt. We propose Hyper BayesOpt (HyperBO), a highly customizable interface with an algorithm that removes the need for quantifying model parameters for Gaussian processes in BayesOpt. For new optimization problems, experts can simply select previous tasks that are relevant to the current task they are trying to solve. HyperBO pre-trains a Gaussian process model on data from those selected tasks, and automatically defines the model parameters before running BayesOpt. HyperBO enjoys theoretical guarantees on the alignment between the pre-trained model and the ground truth, as well as the quality of its solutions for black-box optimization. We share strong results of HyperBO both on our new tuning benchmarks for near–state-of-the-art deep learning models and on classic multi-task black-box optimization benchmarks (HPO-B). We also demonstrate that HyperBO is robust to the selection of relevant tasks and has low requirements on the amount of data and tasks for pre-training.

Loss functions for pre-training

We pre-train a Gaussian process model by minimizing the Kullback–Leibler divergence (a commonly used divergence) between the ground truth model and the pre-trained model. Since the ground truth model is unknown, we cannot directly compute this loss function. To work around this, we introduce two data-driven approximations: (1) Empirical Kullback–Leibler divergence (EKL), which is the divergence between an empirical estimate of the ground truth model and the pre-trained model; (2) Negative log likelihood (NLL), which is the sum of negative log likelihoods of the pre-trained model for all training functions. The computational cost of EKL or NLL scales linearly with the number of training functions. Moreover, stochastic gradient–based methods like Adam can be employed to optimize the loss functions, which further lowers the cost of computation. In well-controlled environments, optimizing EKL and NLL leads to the same result, but their optimization landscapes can be very different. For example, in the simplest case where the function only has one possible input, its Gaussian process model becomes a Gaussian distribution, described by the mean (m) and variance (s). Hence the loss function only has those two parameters, m and s, so both EKL and NLL can be visualized as surfaces over m and s.
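The one-input case can be written out in a few lines. The following is a minimal sketch, not HyperBO's implementation: it assumes one observation per training function, takes the empirical estimate to be the sample mean and the biased sample variance, and assumes EKL is the divergence from the empirical Gaussian to the model Gaussian. The function names and the sample values in `ys` are hypothetical.

```python
import math

def empirical_gaussian(ys):
    """Sample mean and biased (divide-by-n) sample variance of the observations."""
    n = len(ys)
    m_hat = sum(ys) / n
    s_hat = sum((y - m_hat) ** 2 for y in ys) / n
    return m_hat, s_hat

def ekl(ys, m, s):
    """KL( N(m_hat, s_hat) || N(m, s) ), where s and s_hat are variances.

    Assumption: the empirical Gaussian is the first argument of the divergence.
    """
    m_hat, s_hat = empirical_gaussian(ys)
    return 0.5 * (math.log(s / s_hat) + (s_hat + (m_hat - m) ** 2) / s - 1.0)

def nll(ys, m, s):
    """Sum over training functions of the negative log density under N(m, s)."""
    return sum(0.5 * (math.log(2.0 * math.pi * s) + (y - m) ** 2 / s) for y in ys)

# Hypothetical observations, one per training function, at the single input.
ys = [0.8, 1.1, 1.4, 0.9, 1.3]
m_hat, s_hat = empirical_gaussian(ys)

print("empirical mean and variance:", m_hat, s_hat)
print("EKL at the empirical estimate:", ekl(ys, m_hat, s_hat))
print("NLL at the empirical estimate:", nll(ys, m_hat, s_hat))
```

Under these assumptions both losses are minimized at the same point, the empirical mean and variance. Evaluating `ekl` and `nll` on a grid of (m, s) values is one way to draw the two surfaces.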

Pre-training improves Bayesian optimization

In the BayesOpt algorithm, decisions on where to evaluate the black-box function are made iteratively. The decision criteria are based on the confidence levels provided by the Gaussian process, which are updated in each iteration by conditioning on previous data points acquired by BayesOpt. Intuitively, the updated confidence levels should be just right: neither overly confident nor too unsure, since in either of these two cases, BayesOpt cannot make decisions that match what an expert would do.
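The iterative loop described above can be sketched with a hand-specified Gaussian process, which is the traditional setup that HyperBO replaces. Everything here is an illustrative assumption rather than the method from the paper: a zero mean function, a squared-exponential kernel with a fixed length scale, an upper-confidence-bound decision rule, a search over a fixed grid, and a toy `black_box` objective.

```python
import numpy as np

def rbf_kernel(a, b, lengthscale=0.2):
    """Squared-exponential kernel with unit prior variance (hand-specified)."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at x_query."""
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf_kernel(x_obs, x_query)
    solved = np.linalg.solve(K, K_s)               # K^{-1} K_s
    mean = solved.T @ y_obs
    var = 1.0 - np.sum(K_s * solved, axis=0)       # prior variance is 1
    return mean, np.sqrt(np.maximum(var, 0.0))

def black_box(x):
    """Hypothetical objective standing in for an expensive evaluation."""
    return -(x - 0.7) ** 2

def bayes_opt(n_iters=10, beta=2.0):
    grid = np.linspace(0.0, 1.0, 201)
    x_obs = np.array([0.0, 1.0])                   # two initial evaluations
    y_obs = black_box(x_obs)
    for _ in range(n_iters):
        # Condition the GP on all data acquired so far.
        mean, std = gp_posterior(x_obs, y_obs, grid)
        # Upper confidence bound: favor high predicted value or high uncertainty.
        x_next = grid[np.argmax(mean + beta * std)]
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, black_box(x_next))
    best = np.argmax(y_obs)
    return x_obs[best], y_obs[best]

best_x, best_y = bayes_opt()
print("best input found:", best_x, "objective value:", best_y)
```

The length scale and `beta` are exactly the kind of hand-chosen quantities that control how confident the model is. If the length scale is too large, the confidence bands become too narrow away from the data. If it is too small, the model stays unsure almost everywhere.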

In HyperBO, we replace the hand-specified model in traditional BayesOpt with the pre-trained Gaussian process. Under mild conditions and with enough training functions, we can mathematically verify good theoretical properties of HyperBO: (1) Alignment: the pre-trained Gaussian process is guaranteed to be close to the ground truth model when both are conditioned on observed data points; (2) Optimality: HyperBO is guaranteed to find a near-optimal solution to the black-box optimization problem for any functions distributed according to the unknown ground truth Gaussian process.

We visualize the Gaussian process (areas shaded in purple are 95% and 99% confidence intervals) conditional on observations (black dots) from an unknown test function (orange line). Compared to traditional BayesOpt without pre-training, the predicted confidence levels in HyperBO capture the unknown test function much better, which is a critical prerequisite for Bayesian optimization.

Empirically, to define the structure of pre-trained Gaussian processes, we choose to use very expressive mean functions modeled by neural networks, and apply well-defined kernel functions on inputs encoded to a higher dimensional space with neural networks.
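As a rough illustration of that structure, the sketch below builds a mean function from a small neural network and applies a standard kernel to inputs that a second network has encoded into a higher dimensional space. The layer sizes, the tanh activations, the squared-exponential base kernel, and the random, untrained weights are all assumptions made for the example. In pre-training, such weights would be fitted by minimizing EKL or NLL.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical, untrained parameters: 2-d inputs, 8-d hidden features.
W_enc, b_enc = rng.normal(size=(2, 8)), rng.normal(size=8)     # kernel encoder
W_mean, b_mean = rng.normal(size=(2, 8)), rng.normal(size=8)   # mean network
w_out = rng.normal(size=8)

def encode(x):
    """Map each input row to a higher dimensional feature vector."""
    return np.tanh(x @ W_enc + b_enc)

def mean_fn(x):
    """Mean function modeled by a one-hidden-layer network."""
    return np.tanh(x @ W_mean + b_mean) @ w_out

def kernel_fn(x1, x2, lengthscale=1.0):
    """Squared-exponential kernel evaluated on the encoded inputs."""
    z1, z2 = encode(x1), encode(x2)
    sq_dist = np.sum((z1[:, None, :] - z2[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq_dist / lengthscale ** 2)

x = rng.uniform(size=(5, 2))   # five hypothetical hyperparameter configurations
mu = mean_fn(x)                # prior mean at each configuration, shape (5,)
K = kernel_fn(x, x)            # prior covariance matrix, shape (5, 5)
print(mu.shape, K.shape)
```

Because the base kernel is applied to the encoder's outputs, the resulting covariance matrix remains symmetric and positive semi-definite regardless of the encoder weights, which is what makes this composition a valid kernel.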

To evaluate HyperBO on challenging and realistic black-box optimization problems, we created the PD1 benchmark, which contains a dataset for multi-task hyperparameter optimization for deep neural networks. PD1 was developed by training tens of thousands of configurations of near–state-of-the-art deep learning models on popular image and text datasets, as well as a protein sequence dataset. PD1 contains approximately 50,000 hyperparameter evaluations from 24 different tasks (e.g., tuning Wide ResNet on CIFAR100) with roughly 12,000 machine days of computation.

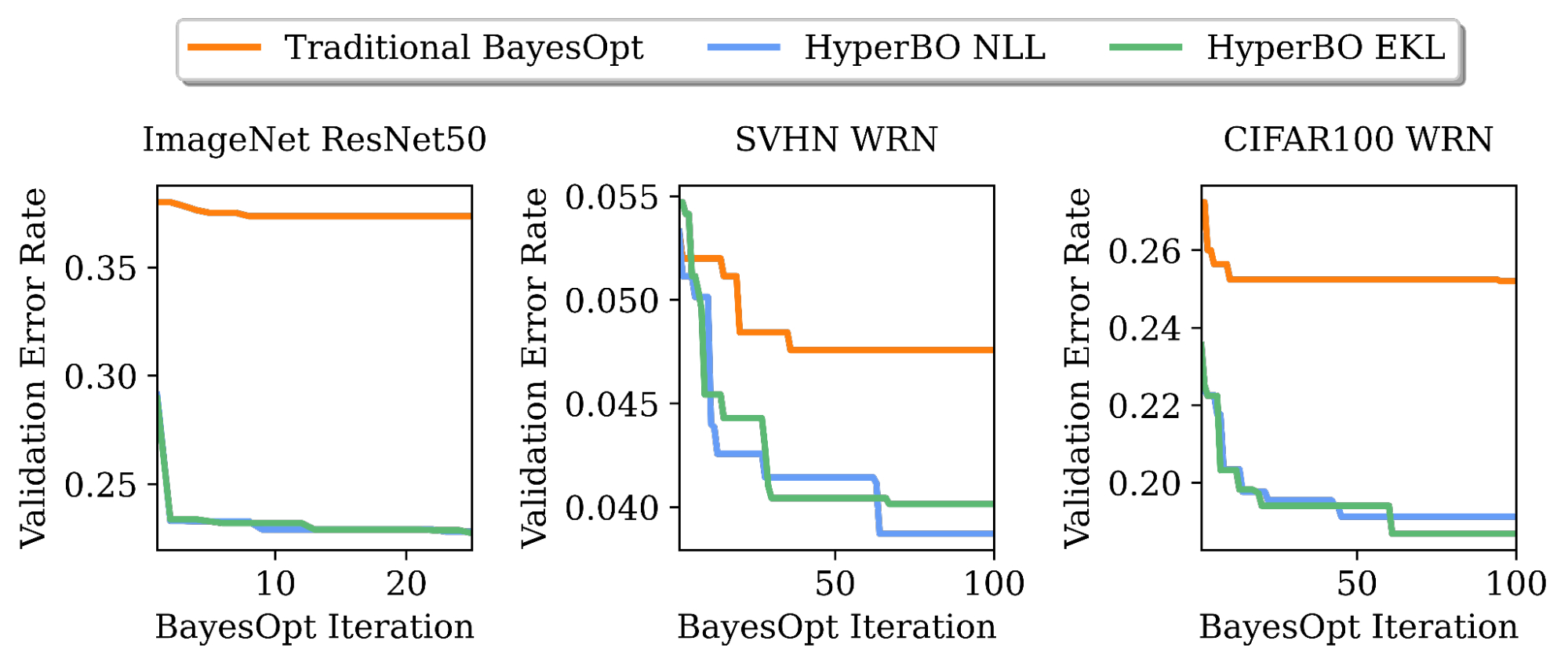

We demonstrate that when pre-training for only a few hours on a single CPU, HyperBO can significantly outperform BayesOpt with carefully hand-tuned models on unseen challenging tasks, including tuning ResNet50 on ImageNet. Even with only ~100 data points per training function, HyperBO can perform competitively against baselines.

Tuning validation error rates of ResNet50 on ImageNet and Wide ResNet (WRN) on the Street View House Numbers (SVHN) dataset and CIFAR100. By pre-training on only ~20 tasks and ~100 data points per task, HyperBO can significantly outperform traditional BayesOpt (with a carefully hand-tuned Gaussian process) on previously unseen tasks.

Conclusion and future work

HyperBO is a framework that pre-trains a Gaussian process and subsequently performs Bayesian optimization with the pre-trained model. With HyperBO, we no longer have to hand-specify the exact quantitative parameters in a Gaussian process. Instead, we only need to identify related tasks and their corresponding data for pre-training. This makes BayesOpt both more accessible and more effective. An important future direction is to enable HyperBO to generalize over heterogeneous search spaces, for which we are developing new algorithms by pre-training a hierarchical probabilistic model.

Acknowledgements

The following members of the Google Research Brain Team conducted this research: Zi Wang, George E. Dahl, Kevin Swersky, Chansoo Lee, Zachary Nado, Justin Gilmer, Jasper Snoek, and Zoubin Ghahramani. We’d like to thank Zelda Mariet and Matthias Feurer for help and consultation on transfer learning baselines. We’d also like to thank Rif A. Saurous for constructive feedback, and Rodolphe Jenatton and David Belanger for feedback on previous versions of the manuscript. In addition, we thank Sharat Chikkerur, Ben Adlam, Balaji Lakshminarayanan, Fei Sha and Eytan Bakshy for comments, and Setareh Ariafar and Alexander Terenin for conversations on animation. Finally, we thank Tom Small for designing the animation for this post.
